TL;DR: Machine learning has revolutionized exoplanet detection, with AI systems discovering planets human astronomers missed and processing millions of observations autonomously.

Within the next decade, artificial intelligence may well have catalogued more exoplanets than all human astronomers combined throughout history. The shift behind that forecast isn't speculative - it's already underway. Right now, machine learning algorithms scan telescope data streams around the clock, flagging planetary candidates that would take human teams months to identify. These systems don't tire, don't blink, and increasingly, they're spotting worlds that our own eyes miss completely.

In 2017, a neural network built by Google researchers uncovered two exoplanets lurking in archival Kepler telescope data. The planets, Kepler-90i and Kepler-80g, had been overlooked by earlier analyses. The network had been trained on about 15,000 pre-labeled Kepler signals, and in testing it correctly distinguished real planets from false positives 96% of the time. But here's what made headlines: the team had searched only 670 of the roughly 150,000 stars Kepler monitored.

That discovery marked a watershed moment. For the first time, machines demonstrated they could do more than assist human astronomers - they could actively discover worlds we'd never have found on our own. If AI could find hidden planets in well-studied data, what else were we missing?

Fast forward to today, and the picture looks radically different. In 2021, NASA announced that ExoMiner, a deep learning model, had validated 301 new planets in Kepler's data, confirming candidates that might otherwise have remained uncertain for years. Machine learning hasn't just augmented exoplanet science - it's fundamentally restructured how we approach the search for other worlds.

Before machine learning entered the picture, exoplanet hunting resembled sorting through mountains of sand to find diamonds. Human analysts would manually inspect light curves - graphs showing a star's brightness over time - looking for the telltale dip that signals a planet crossing in front of its host star. With missions like Kepler monitoring hundreds of thousands of stars simultaneously, the data deluge quickly overwhelmed human capacity.

Traditional methods relied on threshold algorithms and statistical tests designed by astronomers to flag obvious transit signals. These rule-based systems worked well for textbook cases: clear, periodic dips in brightness with minimal noise. But real telescope data is messy. Stellar activity creates false positives, instrument systematics introduce artifacts, and the most interesting planets - those in the habitable zone around Sun-like stars - often produce signals so faint they hide beneath the noise floor.
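Here is a minimal Python sketch of the rule-based approach. It assumes a normalized light curve and a hand-picked cutoff of three robust standard deviations below the median. The function name `flag_dips` and the `k=3.0` default are illustrative choices, not any mission's actual pipeline. The synthetic data includes a single-point glitch, and the rule flags it just as readily as the real transits.

```python
import statistics

def flag_dips(flux, k=3.0):
    """Return indices whose flux falls more than k robust sigmas below the median.

    A toy rule-based detector: the cutoff is hand-chosen, not learned from data.
    """
    median = statistics.median(flux)
    # Median absolute deviation, scaled by 1.4826 to approximate a Gaussian sigma
    mad = statistics.median([abs(f - median) for f in flux])
    threshold = median - k * 1.4826 * mad
    return [i for i, f in enumerate(flux) if f < threshold]

# Synthetic light curve: baseline 1.0 with small alternating "noise",
# a 1% transit lasting 3 samples every 50 samples, and one glitch.
flux = [1.0 + (0.0005 if i % 2 else -0.0005) for i in range(200)]
for start in (20, 70, 120, 170):
    for i in range(start, start + 3):
        flux[i] -= 0.01
flux[100] -= 0.02  # single-point instrumental glitch, not a planet

print(flag_dips(flux))
```

Index 100 appears in the output alongside the twelve transit samples. A fixed threshold cannot tell a glitch from a transit, and that kind of false positive is what learned classifiers aim to reduce.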

Enter machine learning. Unlike hand-crafted algorithms that follow rigid rules, neural networks learn patterns directly from data. Convolutional neural networks can process raw light curves the same way they analyze images, identifying subtle transit signatures that traditional methods miss. Recurrent neural networks capture temporal patterns across thousands of data points, distinguishing genuine planetary transits from instrumental glitches or stellar variability.

The technical workflow represents a fundamental reimagining of telescope operations. Modern ML-assisted systems operate in stages: data ingestion, feature extraction, classification, and prioritization. When a telescope like TESS captures photometric measurements, those raw data streams feed directly into preprocessing pipelines that normalize brightness values and segment the time series into manageable chunks.
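The preprocessing stage fits in a few lines. This sketch assumes raw photometry arrives as a plain list of flux values. Median normalization and fixed-size, optionally overlapping windows are common choices but not the only ones, and the helper names are hypothetical.

```python
import statistics

def normalize(flux):
    """Divide by the median so the out-of-transit baseline sits near 1.0."""
    med = statistics.median(flux)
    return [f / med for f in flux]

def segment(series, window, step=None):
    """Split a series into fixed-length windows; overlapping when step < window.

    Trailing samples that do not fill a whole window are dropped.
    """
    step = step or window
    return [series[i:i + window] for i in range(0, len(series) - window + 1, step)]

raw = [5000.0, 5002.0, 4998.0, 4950.0, 5001.0, 4999.0, 5003.0, 4997.0]  # raw counts
chunks = segment(normalize(raw), window=4, step=2)
print(len(chunks), [round(x, 4) for x in chunks[0]])
```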

Feature extraction algorithms then compute statistical descriptors - transit depth, duration, periodicity, signal-to-noise ratio - and feed these into trained classifiers. Gradient boosting machines and random forests excel at this stage. For instance, researchers using gradient boosting models on simulated observatory data achieved precision exceeding 99.8%, with recall rates above 99.7%.
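The sketch below shows feature extraction on a small scale. It phase-folds a light curve at a trial period and computes transit depth, a duration proxy and a white-noise signal-to-noise estimate. It assumes the trial period, a reference transit time `t0` and the transit half-width are already known; in practice they come from a period search. The dictionary keys and the use of days as the time unit are invented for this example. A feature vector like this would then go to a classifier such as a gradient boosting model.

```python
import math
import statistics

def fold_features(times, flux, period, t0, half_width):
    """Phase-fold at a trial period and return simple transit features.

    period, t0 (a transit mid-time) and half_width share the units of `times`.
    """
    in_transit, out_transit = [], []
    for t, f in zip(times, flux):
        # Offset from the nearest transit centre, wrapped into [-period/2, period/2)
        phase = (t - t0 + 0.5 * period) % period - 0.5 * period
        (in_transit if abs(phase) <= half_width else out_transit).append(f)
    baseline = statistics.median(out_transit)
    depth = baseline - statistics.mean(in_transit)
    noise = statistics.pstdev(out_transit)
    # White-noise approximation: SNR grows with sqrt(number of in-transit points)
    snr = depth / noise * math.sqrt(len(in_transit)) if noise > 0 else float("inf")
    return {"period": period, "depth": depth, "duration": 2 * half_width, "snr": snr}

# Synthetic data: 0.5% dips of width 0.4 days every 10 days, plus alternating noise
times = [i * 0.1 for i in range(1000)]
flux = []
for i, t in enumerate(times):
    phase = (t - 5.0 + 5.0) % 10.0 - 5.0
    dip = 0.005 if abs(phase) <= 0.2 else 0.0
    flux.append(1.0 + (0.0002 if i % 2 else -0.0002) - dip)

right = fold_features(times, flux, period=10.0, t0=5.0, half_width=0.2)
wrong = fold_features(times, flux, period=7.3, t0=5.0, half_width=0.2)
print(round(right["depth"], 4), round(wrong["depth"], 4))
```

Folding at the wrong period smears the dip across all phases, so the measured depth collapses. That contrast is what makes period and depth useful features.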

What makes this approach revolutionary isn't just accuracy - it's autonomy. Once trained, these models run continuously, scanning incoming data and updating candidate lists in near-real-time. Astronomers no longer wade through every light curve manually. Instead, they focus on high-priority targets flagged by algorithms, conducting follow-up observations and validation studies.
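At its core, "updating candidate lists" means maintaining a priority queue. Below is a minimal sketch built on Python's standard `heapq` module. The class name and the `cand-*` identifiers are hypothetical, and a real system would also persist its state and deduplicate repeat detections.

```python
import heapq

class CandidateQueue:
    """Keep incoming candidates ordered by classifier score, highest first."""

    def __init__(self):
        self._heap = []
        self._counter = 0  # tie-breaker: equal scores keep arrival order

    def push(self, target_id, score):
        # heapq is a min-heap, so store the negated score
        heapq.heappush(self._heap, (-score, self._counter, target_id))
        self._counter += 1

    def top(self, n):
        """Return up to n highest-scoring targets without removing them."""
        return [tid for _, _, tid in heapq.nsmallest(n, self._heap)]

queue = CandidateQueue()
for tid, score in [("cand-A", 0.42), ("cand-B", 0.97), ("cand-C", 0.81), ("cand-D", 0.97)]:
    queue.push(tid, score)
print(queue.top(3))
```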

The discovery of Kepler-90i stands out because it made Kepler-90 the first known system with eight planets, tying our own solar system. Kepler-1649c, an Earth-size world in its star's habitable zone, shows the partnership working the other way. Kepler's automated vetting software had misclassified its signal as a false positive. Scientists re-examining those rejected signals by hand then recognized the faint periodic dip as genuine.

Machine learning methods have also revolutionized radial velocity analysis, where astronomers measure stellar wobbles caused by orbiting planets. Vision transformers - a newer class of neural architecture - now process radial velocity spectra with unprecedented sensitivity, detecting planetary signals buried in stellar noise that conventional techniques couldn't resolve.
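A quick calculation shows why radial velocity signals from Earth-like planets are so hard to extract. The sketch evaluates the standard semi-amplitude formula K = (2πG / P)^(1/3) * m_p * sin(i) / (M_star + m_p)^(2/3) / sqrt(1 - e^2) with rounded physical constants. It assumes a single planet and, by default, an edge-on circular orbit.

```python
import math

G = 6.674e-11          # gravitational constant, m^3 kg^-1 s^-2
M_SUN = 1.989e30       # kg
M_JUP = 1.898e27       # kg
M_EARTH = 5.972e24     # kg
YEAR = 3.156e7         # s

def rv_semi_amplitude(m_planet, m_star, period_s, sin_i=1.0, ecc=0.0):
    """Stellar radial-velocity semi-amplitude K in m/s for a single planet."""
    return ((2 * math.pi * G / period_s) ** (1 / 3)
            * m_planet * sin_i
            / (m_star + m_planet) ** (2 / 3)
            / math.sqrt(1 - ecc ** 2))

k_jup = rv_semi_amplitude(M_JUP, M_SUN, 11.86 * YEAR)
k_earth = rv_semi_amplitude(M_EARTH, M_SUN, 1.0 * YEAR)
print(f"Jupiter: {k_jup:.1f} m/s, Earth: {k_earth:.3f} m/s")
```

With these inputs, Jupiter moves the Sun by roughly 12.5 m/s, while Earth manages only about 0.09 m/s. The Earth signal is more than a hundred times weaker.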

Even more intriguing is the application to direct imaging. Transformer architectures applied to high-contrast imaging data have dramatically improved detection limits, identifying faint planetary companions that traditional image processing would miss.

The diversity of these discoveries underscores a broader point: machine learning isn't a single tool, but an entire toolkit applicable across detection methods - transit photometry, radial velocity, direct imaging, even gravitational microlensing.

How do AI methods stack up against traditional approaches? Detection efficiency - the percentage of real planets identified - is where machine learning shines. Studies using Kepler data report detection efficiencies above 96%, compared to roughly 70-85% for manual vetting depending on signal strength.

False-positive rates tell another story. Early automated systems flagged many spurious candidates. Modern deep learning classifiers have flipped this script. Hybrid architectures achieve false-positive rates below 5%, comparable to or better than expert human reviewers. Gradient boosting machines trained on comprehensive feature sets push false-positive contamination under 1% in some applications.
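Papers define these metrics slightly differently, so it helps to spell them out. The sketch below computes four quantities from binary labels:

- detection efficiency (recall)
- precision
- false-positive rate
- contamination, the share of flagged candidates that are not planets

The example labels are made up for illustration.

```python
def vetting_metrics(y_true, y_pred):
    """Summarise binary vetting results (1 = planet, 0 = not a planet)."""
    pairs = list(zip(y_true, y_pred))
    tp = pairs.count((1, 1))   # real planets that were flagged
    fn = pairs.count((1, 0))   # real planets that were missed
    fp = pairs.count((0, 1))   # non-planets that were flagged
    tn = pairs.count((0, 0))   # non-planets correctly rejected

    def ratio(num, den):
        return num / den if den else 0.0

    return {
        "detection_efficiency": ratio(tp, tp + fn),  # a.k.a. recall
        "precision": ratio(tp, tp + fp),
        "false_positive_rate": ratio(fp, fp + tn),   # share of non-planets flagged
        "contamination": ratio(fp, tp + fp),         # share of flagged that are not planets
    }

# Made-up example: 10 real planets among 100 signals
y_true = [1] * 10 + [0] * 90
y_pred = [1] * 9 + [0] + [1] * 3 + [0] * 87
print(vetting_metrics(y_true, y_pred))
```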

Processing speed represents perhaps the starkest contrast. A human analyst might review a few hundred light curves in a day. A trained neural network can process millions of observations in hours. The Vera C. Rubin Observatory, once operational, will generate 400,000 to 5 million alerts per night. No human team could possibly vet that volume, but machine learning pipelines can triage the flood.

Yet humans maintain critical advantages. We excel at recognizing novel phenomena that fall outside training distributions. If a completely unexpected signal appears - something the algorithm was never trained to identify - human intuition and domain expertise become indispensable. The relationship isn't competitive; it's symbiotic.

Interpretability offers another dimension. Traditional methods produce explainable decisions based on defined thresholds. Neural networks, especially deep architectures, operate as "black boxes." Feature importance analysis using random forests reveals which input variables drive decisions, but the internal logic of deep networks remains opaque.

This interpretability gap matters for scientific validation. When you publish a planetary discovery, you must convince skeptics that the signal is real. A human-readable decision tree provides transparent justification. That's why modern pipelines combine ML classification with traditional validation steps - spectroscopic follow-up, multi-band photometry, dynamical modeling.

Let's get under the hood. A convolutional neural network designed for light curve analysis typically consists of multiple convolutional layers that act as feature detectors, scanning the input for patterns like periodic dips or characteristic transit shapes. These features feed into fully connected layers that combine information and output a probability: planet or non-planet.
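The conv-then-dense structure can be shown with a single hand-set filter. This is a toy forward pass in plain Python, not a trained network. It makes three simplifying assumptions:

- the input is median-normalized flux;
- one zero-sum kernel shaped like a three-sample dip stands in for a bank of learned filters;
- global max-pooling and a single sigmoid unit replace the fully connected stack, with an arbitrary weight and bias.

```python
import math

def conv1d(signal, kernel):
    """'Valid' 1-D cross-correlation: the sliding dot product a conv layer computes."""
    k = len(kernel)
    return [sum(signal[i + j] * kernel[j] for j in range(k))
            for i in range(len(signal) - k + 1)]

def tiny_cnn(flux, kernel, weight, bias):
    """Conv -> ReLU -> global max-pool -> one dense unit -> sigmoid probability."""
    centred = [f - 1.0 for f in flux]                 # baseline of normalised flux is 1.0
    activations = [max(0.0, a) for a in conv1d(centred, kernel)]  # ReLU
    pooled = max(activations)                          # strongest dip-like response
    return 1.0 / (1.0 + math.exp(-(weight * pooled + bias)))

# Hand-set, zero-sum kernel: positive shoulders, negative centre (a 3-sample dip)
DIP_KERNEL = [1.5, -1.0, -1.0, -1.0, 1.5]
WEIGHT, BIAS = 1000.0, -5.0   # arbitrary illustrative values, not trained

flat = [1.0 + (0.0005 if i % 2 else -0.0005) for i in range(60)]
dipped = list(flat)
for i in range(28, 31):
    dipped[i] -= 0.01          # inject a 1% dip lasting 3 samples

print(tiny_cnn(flat, DIP_KERNEL, WEIGHT, BIAS), tiny_cnn(dipped, DIP_KERNEL, WEIGHT, BIAS))
```

Because the kernel sums to zero, a constant brightness offset produces no response. Only a dip relative to its shoulders drives the output up.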

Training requires labeled examples - thousands of confirmed planets and validated false positives. The network adjusts internal parameters to minimize classification errors on this training set, learning which patterns correlate with true detections. Crucially, the model also learns to ignore instrumental noise, stellar spots, or eclipsing binary stars that mimic transits.
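The smallest possible "network" illustrates training: one sigmoid neuron fitted by gradient descent on cross-entropy loss. The two features and the data are invented for this sketch. One is a scaled SNR. The other is an odd/even transit-depth difference, a mismatch that eclipsing binaries often show and planets do not. Real networks have far more parameters and are usually trained with a deep learning library, but the principle is the same: nudge each parameter against the gradient of the loss.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_logistic(X, y, lr=0.5, epochs=2000):
    """Fit one sigmoid neuron by full-batch gradient descent on cross-entropy loss."""
    n = len(X[0])
    w, b = [0.0] * n, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * n, 0.0
        for xi, yi in zip(X, y):
            p = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b)
            err = p - yi                     # dLoss/dz for sigmoid + cross-entropy
            for j in range(n):
                grad_w[j] += err * xi[j]
            grad_b += err
        w = [wj - lr * g / len(X) for wj, g in zip(w, grad_w)]
        b -= lr * grad_b / len(X)
    return w, b

def predict(w, b, x):
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)

# Invented training set: [scaled SNR, odd/even depth difference]
rng = random.Random(0)
X, y = [], []
for _ in range(50):
    X.append([rng.uniform(0.3, 1.0), rng.uniform(0.0, 0.1)])  # planet-like
    y.append(1)
    X.append([rng.uniform(0.3, 1.0), rng.uniform(0.6, 1.0)])  # binary-like
    y.append(0)

w, b = train_logistic(X, y)
print(round(predict(w, b, [0.65, 0.0]), 3), round(predict(w, b, [0.65, 0.9]), 3))
```

After training, the weight on the odd/even feature is negative. A candidate with matching odd and even depths therefore scores high, and one with alternating depths scores low.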

Recurrent neural networks add temporal memory, essential for identifying periodicity. An RNN processes a light curve sequentially, maintaining a hidden state that encodes information about previous time steps. This architecture naturally captures repeating transit events, even when observation gaps or data quality variations complicate the signal.
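A one-unit recurrent cell shows how a hidden state carries information forward. In this sketch the weights are hand-set rather than learned, and the input at each time step is simply the dip depth. Because the recurrent weight is close to 1, evidence from earlier transits has not fully decayed when the next one arrives.

```python
import math

def rnn_states(inputs, w_h=0.98, w_x=20.0, b=0.0):
    """One-unit Elman RNN, h_t = tanh(w_h * h_{t-1} + w_x * x_t + b).

    Weights are illustrative, not trained. Returns the hidden state at every step.
    """
    h, states = 0.0, []
    for x in inputs:
        h = math.tanh(w_h * h + w_x * x + b)
        states.append(h)
    return states

# Toy input: dip depth (1 - normalised flux); a 1% dip lasting 3 steps every 50 steps
signal = [0.01 if i % 50 in (10, 11, 12) else 0.0 for i in range(200)]
states = rnn_states(signal)
peaks = [states[start + 2] for start in (10, 60, 110, 160)]
print([round(p, 3) for p in peaks])
```

Each successive transit drives the hidden state to a slightly higher peak than the last. This is a crude version of the evidence accumulation a trained RNN can learn.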

For next-generation surveys, the challenge scales beyond individual light curves. Systems must handle real-time classification of transient events - sudden brightening or dimming that could indicate anything from a supernova to a microlensing event. These pipelines couple Gaussian Process models for smooth interpolation with boosted classifiers that achieve 95% precision while maintaining 72% recall.
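The Gaussian process half of such a pipeline can be sketched without external libraries. This example assumes a zero-mean GP with a squared-exponential kernel and fixed, hand-picked hyperparameters (length scale, amplitude, noise). Production pipelines typically fit those hyperparameters per object and use a numerical library rather than the naive solver below. The boosted classifier is omitted.

```python
import math

def rbf(a, b, length=1.0, amp=1.0):
    """Squared-exponential covariance between two observation times."""
    return amp ** 2 * math.exp(-0.5 * ((a - b) / length) ** 2)

def solve(A, rhs):
    """Solve A x = rhs by Gaussian elimination with partial pivoting (small systems)."""
    n = len(A)
    M = [row[:] + [v] for row, v in zip(A, rhs)]      # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):                     # back substitution
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x

def gp_predict(t_obs, y_obs, t_new, length=1.0, amp=1.0, noise=1e-3):
    """Posterior mean of a zero-mean GP at times t_new, given noisy observations."""
    K = [[rbf(a, b, length, amp) + (noise ** 2 if i == j else 0.0)
          for j, b in enumerate(t_obs)] for i, a in enumerate(t_obs)]
    alpha = solve(K, y_obs)                            # alpha = (K + noise^2 I)^-1 y
    return [sum(rbf(t, a, length, amp) * w for a, w in zip(t_obs, alpha)) for t in t_new]

# Irregularly sampled, already-centred brightness measurements (a sine wave here)
t_obs = [0.0, 0.7, 1.5, 2.1, 3.0, 3.8, 4.4, 5.2, 6.0]
y_obs = [math.sin(t) for t in t_obs]
print(gp_predict(t_obs, y_obs, [2.55, 50.0]))
```

Far from the data, the posterior mean relaxes back to the prior mean of zero. This is why brightness values are usually centred before fitting.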

Another cutting-edge approach involves transformers. Originally developed for natural language processing, and later adapted to images as vision transformers, these architectures excel at capturing long-range dependencies in sequential data. Applied to stellar spectra, transformers identify subtle periodic Doppler shifts caused by orbiting planets, outperforming traditional cross-correlation methods.
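Scaled dot-product attention is the mechanism that lets a transformer relate distant parts of a sequence. The sketch below simplifies it by using the input vectors directly as queries, keys and values. Real models learn separate projection matrices, use multiple heads and add positional encodings. The two-dimensional "tokens" stand in for embedded chunks of a spectrum and are purely illustrative.

```python
import math

def softmax(xs):
    m = max(xs)                                   # subtract the max for stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(tokens):
    """Scaled dot-product self-attention with identity query/key/value projections.

    Returns (outputs, weights); weights[i][j] is how much token i attends to token j.
    """
    d = len(tokens[0])
    outputs, weights = [], []
    for q in tokens:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in tokens]
        w = softmax(scores)
        weights.append(w)
        # Each output is a weighted mix of every token's value vector
        outputs.append([sum(wj * v[c] for wj, v in zip(w, tokens)) for c in range(d)])
    return outputs, weights

# Toy "embeddings" of four spectral chunks; chunks 0 and 2 share a pattern
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0], [0.0, 1.0]]
outputs, weights = self_attention(tokens)
print([round(w, 3) for w in weights[0]])
```

Token 0 attends to token 2 exactly as strongly as to itself, even though the two are not adjacent. Attention weights depend on content, not on distance.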

This technological shift carries profound implications beyond astronomy. For decades, exoplanet discovery remained the province of a small elite - teams with access to major telescopes and specialized expertise. Machine learning is democratizing that landscape.

Amateur astronomers equipped with backyard telescopes and open-source ML tools can now contribute meaningfully. Citizen science platforms already engage thousands of volunteers in classifying light curves, and AI augmentation amplifies their impact. An amateur with a trained neural network can run the same kind of classification models that professional teams use.

This democratization extends to developing nations and institutions without massive budgets. Cloud-based ML platforms and pre-trained models mean a student anywhere can download telescope data and run state-of-the-art detection algorithms on a laptop. The barriers to entry - computational power, algorithmic expertise, data access - are collapsing.

The ripple effects touch education and public engagement. When middle school students can train a simple neural network to identify exoplanets, science becomes tangible and accessible. That shift matters for inspiring the next generation of scientists.

But there's a darker edge. As detection becomes automated and scalable, we risk drowning in discoveries without sufficient follow-up. Confirming an exoplanet candidate requires expensive telescope time. If AI generates thousands of high-quality candidates annually, the bottleneck shifts to confirmation resources. We might face a paradox of abundance: too many planets to study properly.

The promise of AI-driven detection extends well beyond increasing discovery rates. Finding Earth-size planets in habitable zones requires detecting signals so faint they're borderline undetectable with traditional methods. Machine learning pushes sensitivity thresholds lower, uncovering the exact planetary population most relevant to the search for life.
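The numbers behind "borderline undetectable" are easy to estimate. The sketch computes transit depth as (R_p / R_star)^2, ignoring limb darkening. It also computes a signal-to-noise ratio under the simplifying assumption of white noise. Correlated stellar and instrumental noise make real detections harder than this estimate suggests.

```python
import math

R_SUN_KM = 695_700.0
R_EARTH_KM = 6_371.0
R_JUP_KM = 69_911.0

def transit_depth(r_planet_km, r_star_km=R_SUN_KM):
    """Fractional dip in brightness, ignoring limb darkening: (Rp / R*)^2."""
    return (r_planet_km / r_star_km) ** 2

def transit_snr(depth, sigma_per_point, n_in_transit):
    """Approximate SNR assuming white noise: depth / sigma * sqrt(N)."""
    return depth / sigma_per_point * math.sqrt(n_in_transit)

earth = transit_depth(R_EARTH_KM)     # roughly 84 parts per million
jupiter = transit_depth(R_JUP_KM)     # roughly 1%
print(f"Earth: {earth * 1e6:.0f} ppm, Jupiter: {jupiter * 100:.2f}%")
```

An Earth crossing a Sun-like star dims it by about 84 parts per million. That is over a hundred times less than the dip a Jupiter produces.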

Characterization benefits too. Once you've identified an exoplanet, understanding its atmosphere and composition demands intricate analysis of spectroscopic data. Neural networks trained on simulated planetary atmospheres can rapidly infer atmospheric chemistry from transit spectra, guiding observational strategies and prioritizing targets for flagship missions like the James Webb Space Telescope.

The economic dimension matters as well. Automating detection and vetting reduces operational costs for space missions. Rather than maintaining large teams of human analysts, agencies can allocate resources to new instruments or deeper follow-up studies. This efficiency gain redirects human effort toward higher-value scientific questions.

Machine learning also accelerates the pace of discovery, compressing decades of manual analysis into months or weeks. This tempo enables time-sensitive science. If a microlensing event reveals a free-floating exoplanet, rapid ML classification lets follow-up observations begin while the event is still under way. Such alignments last only hours to days and do not repeat.

Perhaps most intriguingly, AI reveals patterns invisible to human perception. Ensemble methods combining multiple model architectures can identify subtle correlations between stellar properties and planetary occurrence rates, informing theories of planet formation. Neural networks might recognize new classes of exoplanets - orbital configurations or compositions we hadn't imagined - prompting theoretical physicists to explain these unexpected findings.

Despite the optimism, significant risks loom. Bias in training data represents a critical concern. If you train a model exclusively on hot Jupiters - massive planets orbiting close to their stars - it will excel at detecting hot Jupiters but might miss Earth-like worlds. Neural networks inherit the biases of their training sets, and exoplanet datasets skew heavily toward easy-to-detect planets.

Overfitting poses another danger. A model that latches onto quirks of one mission's data, rather than learning generalizable patterns, can score brilliantly on validation sets drawn from that same mission. It may still fail catastrophically when applied to a new mission with different noise characteristics. Ensuring models generalize across missions requires careful design and cross-mission validation.
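Leave-one-mission-out splitting is one way to organize cross-mission validation: train on every mission but one, then test on the held-out mission. The sketch assumes each sample is a dict with a `mission` key, and the records are placeholders. scikit-learn's `LeaveOneGroupOut` implements the same idea for array data.

```python
def leave_one_mission_out(samples):
    """Yield (held_out_mission, train, test) splits grouped by the "mission" key.

    Every sample from the held-out mission goes to the test set, so the score
    reflects transfer to unseen instrument noise rather than memorisation.
    """
    missions = sorted({s["mission"] for s in samples})
    for held_out in missions:
        train = [s for s in samples if s["mission"] != held_out]
        test = [s for s in samples if s["mission"] == held_out]
        yield held_out, train, test

# Placeholder records; a real set would carry light curves and labels
data = [
    {"id": 1, "mission": "Kepler"}, {"id": 2, "mission": "Kepler"},
    {"id": 3, "mission": "K2"}, {"id": 4, "mission": "TESS"},
    {"id": 5, "mission": "TESS"},
]
for mission, train, test in leave_one_mission_out(data):
    print(mission, [s["id"] for s in train], [s["id"] for s in test])
```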

False confidence represents a subtler threat. A neural network might output a 98% probability that a signal is a planet, but that confidence reflects the model's internal uncertainty estimate, not absolute truth. If the model is systematically miscalibrated, astronomers might prioritize false leads, wasting precious follow-up resources.
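Miscalibration can be measured directly. The sketch below computes a binned calibration error in the spirit of expected calibration error (ECE). Within each probability bin, it compares the mean predicted planet probability with the fraction of candidates that turned out to be planets. It then averages those gaps, weighted by bin size. The bin count and example numbers are illustrative assumptions.

```python
def calibration_error(probs, labels, n_bins=10):
    """Binned calibration error (ECE-style) for binary planet / non-planet predictions.

    Within each probability bin, compare the mean predicted probability with the
    observed fraction of true planets, then average the gaps weighted by bin size.
    """
    bins = [[] for _ in range(n_bins)]
    for p, y in zip(probs, labels):
        bins[min(int(p * n_bins), n_bins - 1)].append((p, y))  # p == 1.0 -> last bin
    total = len(probs)
    error = 0.0
    for members in bins:
        if members:
            mean_prob = sum(p for p, _ in members) / len(members)
            planet_fraction = sum(y for _, y in members) / len(members)
            error += len(members) / total * abs(mean_prob - planet_fraction)
    return error

# Overconfident: ten candidates scored 0.98, only six confirmed as planets
overconfident = calibration_error([0.98] * 10, [1] * 6 + [0] * 4)
# Calibrated: four candidates scored 0.25, one confirmed
calibrated = calibration_error([0.25] * 4, [1, 0, 0, 0])
print(round(overconfident, 2), round(calibrated, 2))
```

In the overconfident case, the model's stated confidence exceeds the observed planet fraction by 38 percentage points.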

The interpretability gap also complicates scientific discourse. When an algorithm flags a candidate, understanding why isn't always straightforward. This black-box nature conflicts with science's demand for transparency. If two research groups use different neural network architectures and reach different conclusions about the same light curve, resolving the disagreement becomes challenging.

Then there's the infrastructure question. Next-generation surveys will generate petabytes of data annually. Processing that volume demands massive computational resources - GPU clusters, high-speed data pipelines, sophisticated software engineering. Not every institution can afford this infrastructure, potentially recreating the elite bottleneck that ML was supposed to dissolve.

Finally, there's a human dimension. As automation advances, early-career astronomers who once cut their teeth on manual data analysis might lose that hands-on experience. If machine learning does all the detection work, will future scientists develop the intuition needed to design the next generation of algorithms?

Exoplanet research has always been international, but AI amplifies that collaboration in new ways. The European Space Agency's PLATO mission, scheduled to launch in late 2026, will monitor hundreds of thousands of stars. Research groups across continents will analyze its data streams using machine learning pipelines developed collaboratively.

China's growing presence in space science includes ambitious exoplanet projects, and Chinese teams are publishing cutting-edge ML research. Japan's contributions to direct imaging benefit from homegrown AI expertise in computer vision. India's expanding astronomical infrastructure, coupled with a booming tech sector, positions the country as a major player in computational astrophysics.

This global participation raises questions about data access and equity. Will open-source ML models and publicly available datasets level the playing field, or will proprietary algorithms concentrate discovery power in wealthier nations? The International Astronomical Union is wrestling with these questions, advocating for open science principles.

Cultural perspectives on AI differ too. Silicon Valley optimism about technological disruption contrasts with European caution about algorithmic accountability and bias. East Asian research traditions emphasizing meticulous validation may approach black-box neural networks differently than North American labs focused on rapid iteration. These philosophical differences shape how global teams design, validate, and trust ML-driven discoveries.

Whether you're a professional astronomer, student, or enthusiast, pathways to engage with AI-powered exoplanet science abound. Citizen science projects host exoplanet classification tasks where volunteers label light curves to generate training data for neural networks. Your classifications directly improve model performance.

For those with programming skills, open-source repositories provide pre-trained models and datasets. You can download Kepler or TESS light curves, load a pre-trained network, and start exploring. Kaggle hosts competitions challenging data scientists to improve exoplanet detection algorithms.

Educational resources are proliferating. Online courses cover machine learning fundamentals applied to astronomy, often using exoplanet detection as a motivating example. Universities are integrating computational astrophysics into curricula, training the next generation to wield these tools fluently.

If you're a researcher in a related field - computer vision, signal processing, statistics - consider bringing your expertise to exoplanet science. The challenges are rich and the community welcoming. Domain transfer often sparks breakthroughs: techniques from speech recognition might solve a light curve denoising problem.

Policymakers and funding agencies also have a role. Supporting open-source tool development, investing in computational infrastructure for under-resourced institutions, and funding interdisciplinary collaborations will ensure that AI-driven exoplanet science benefits everyone. The discoveries we make in the coming decades will shape humanity's understanding of our place in the cosmos - that knowledge should be built collectively and shared universally.

The next decade promises transformative developments. TESS has already discovered thousands of candidates, and its extended mission will continue feeding data to ML pipelines. The James Webb Space Telescope is characterizing exoplanet atmospheres, generating spectroscopic datasets that neural networks will mine for biosignatures.

The Vera C. Rubin Observatory's Legacy Survey will commence soon, surveying the entire visible sky every few nights. Its transient alert stream - millions of events nightly - will stress-test ML systems at unprecedented scales. Success there will validate AI-driven astronomy for a generation of future surveys.

ESA's PLATO mission will specifically target Earth-analogs around Sun-like stars, combining photometric precision with long observation baselines. Machine learning vetting systems are being designed now to handle PLATO's expected candidate deluge, ensuring high-quality catalogs for follow-up.

Ground-based efforts aren't standing still either. The next generation of extremely large telescopes will revolutionize direct imaging and spectroscopic characterization. Machine learning will be essential for extracting planetary signals from the colossal datasets these instruments generate.

Beyond detection, AI will enable entirely new science. Models trained to predict planetary system architectures might guide our search toward stars most likely to host habitable worlds. Neural networks could simulate planet formation under diverse conditions, testing theoretical models against observational constraints.

And then there's the ultimate question: what will AI tell us about ourselves? As we discover thousands more planets, patterns will emerge about planetary occurrence rates, the frequency of Earth-like worlds, and the conditions necessary for habitability. Machine learning will be instrumental in extracting these patterns, informing our estimates of how common life might be in the universe. The answer to "Are we alone?" may well be computed by an algorithm scanning data from distant stars, revealing at last whether Earth is a cosmic anomaly or one world among countless others teeming with life.

The machines are looking up at the stars now, and they're seeing things we never could. In that partnership - human curiosity guided by artificial intelligence - we're taking the next great step in exploring the universe.

Ahuna Mons on dwarf planet Ceres is the solar system's only confirmed cryovolcano in the asteroid belt - a mountain made of ice and salt that erupted relatively recently. The discovery reveals that small worlds can retain subsurface oceans and geological activity far longer than expected, expanding the range of potentially habitable environments in our solar system.

Scientists discovered 24-hour protein rhythms in cells without DNA, revealing an ancient timekeeping mechanism that predates gene-based clocks by billions of years and exists across all life.

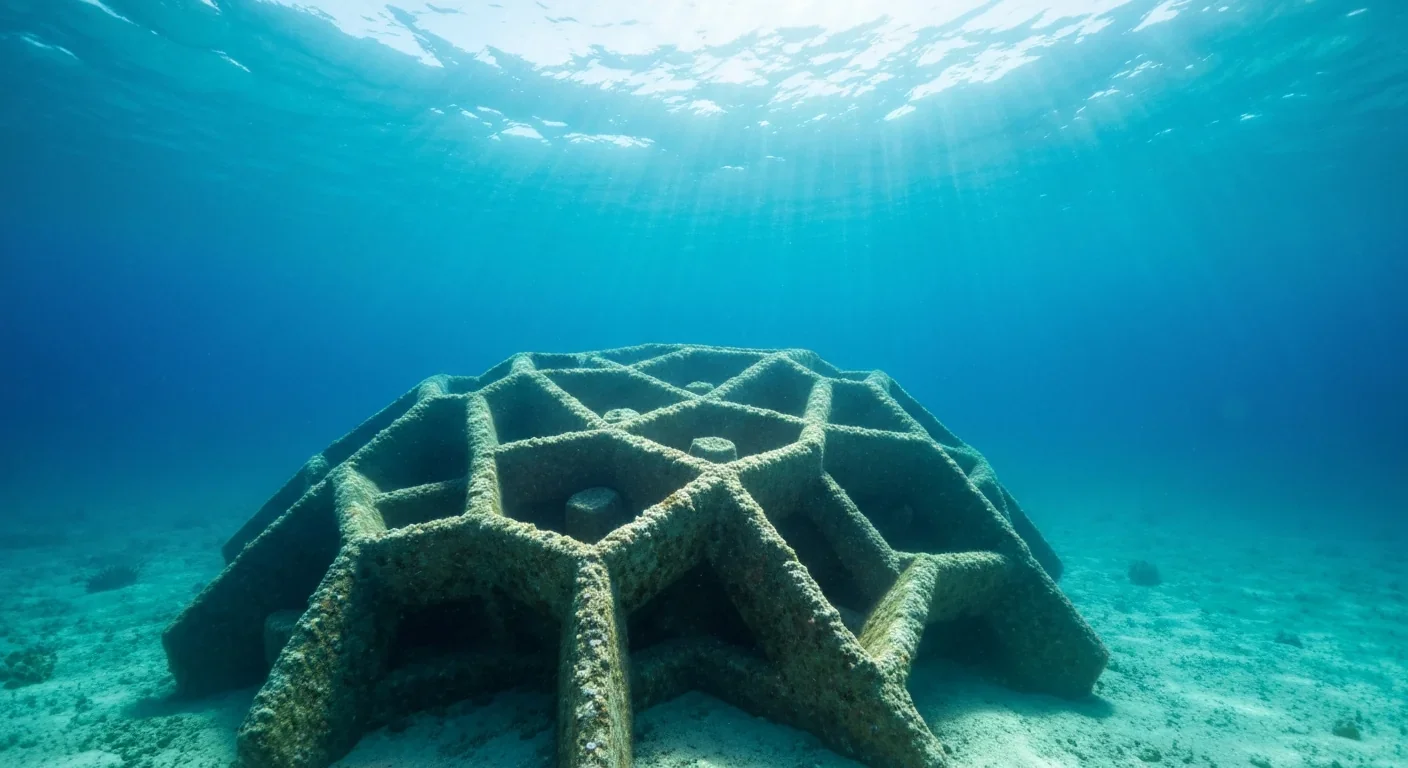

3D-printed coral reefs are being engineered with precise surface textures, material chemistry, and geometric complexity to optimize coral larvae settlement. While early projects show promise - with some designs achieving 80x higher settlement rates - scalability, cost, and the overriding challenge of climate change remain critical obstacles.

The minimal group paradigm shows humans discriminate based on meaningless group labels - like coin flips or shirt colors - revealing that tribalism is hardwired into our brains. Understanding this automatic bias is the first step toward managing it.

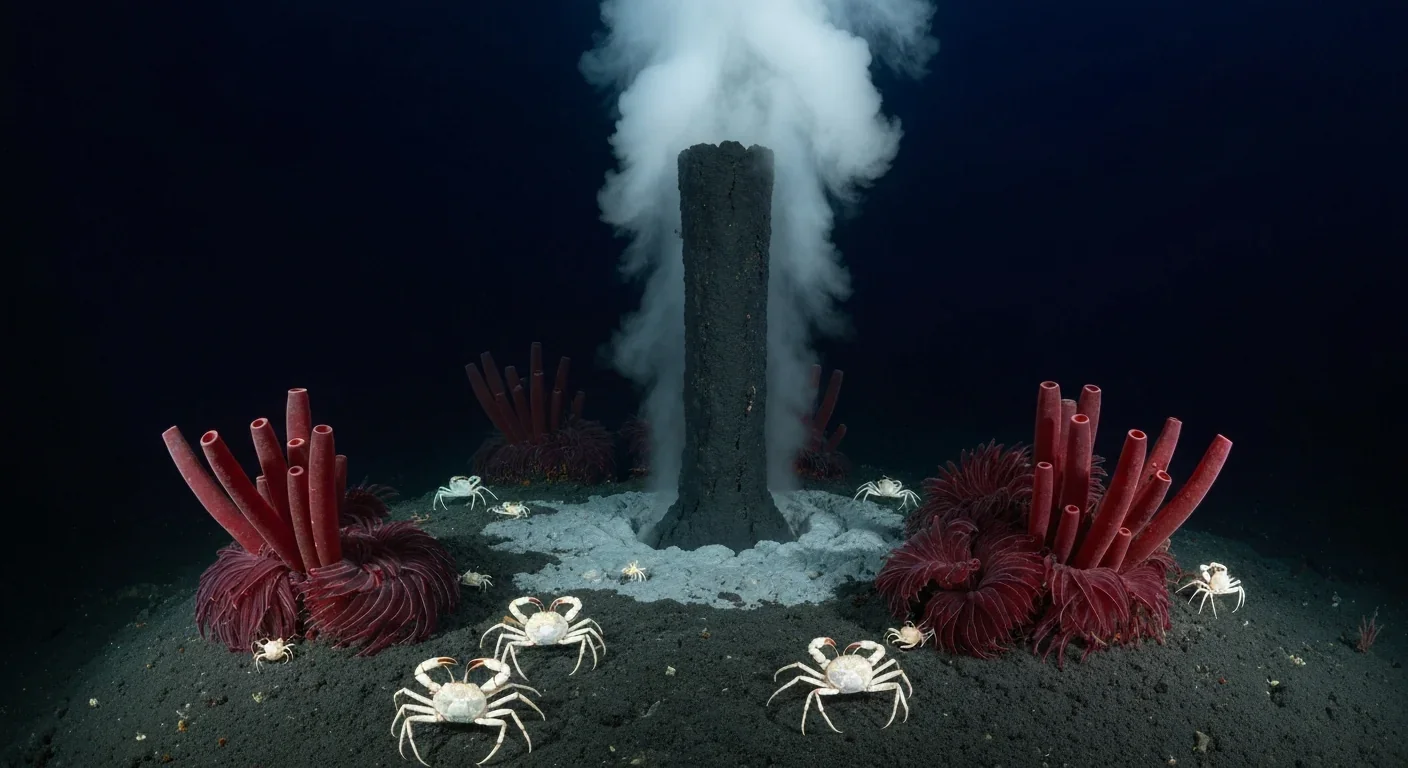

In 1977, scientists discovered thriving ecosystems around underwater volcanic vents powered by chemistry, not sunlight. These alien worlds host bizarre creatures and heat-loving microbes, revolutionizing our understanding of where life can exist on Earth and beyond.

Automated systems in housing - mortgage lending, tenant screening, appraisals, and insurance - systematically discriminate against communities of color by using proxy variables like ZIP codes and credit scores that encode historical racism. While the Fair Housing Act outlawed explicit redlining decades ago, machine learning models trained on biased data reproduce the same patterns at scale. Solutions exist - algorithmic auditing, fairness-aware design, regulatory reform - but require prioritizing equ...

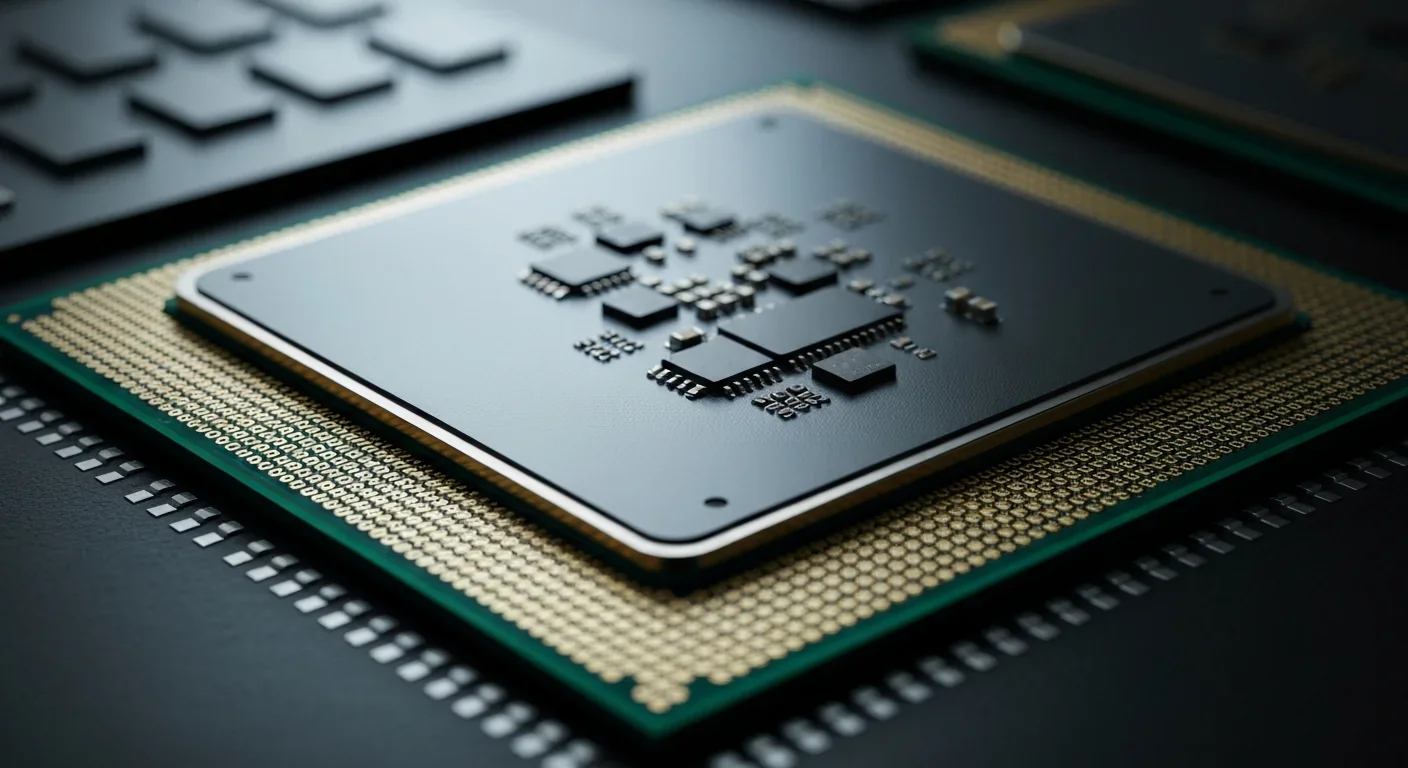

Cache coherence protocols like MESI and MOESI coordinate billions of operations per second to ensure data consistency across multi-core processors. Understanding these invisible hardware mechanisms helps developers write faster parallel code and avoid performance pitfalls.